Azure Cosmos DB is a database service from Microsoft Azure that aims to provide users with a globally distributed, scalable, multi-model database.

In a nutshell, it is a service that can provide “unlimited” capacity and supports multiple data models, which allows us to access and query data using APIs for SQL, MongoDB, Cassandra, Gremlin, Etcd, and Table. We are not going to spend any time discussing these multi-model databases here, but you can click the link if you are interested in how to store and query data with those different API models.

In this article, I would like to share some tips for developers on how to get the most out of Cosmos DB.

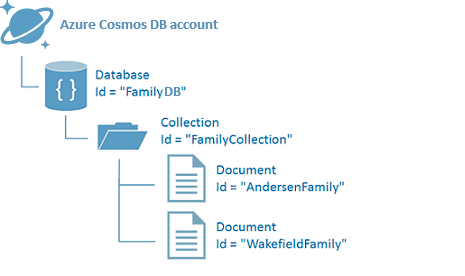

We use Cosmos DB to store and query JSON documents. Cosmos DB organizes data into databases, collections, and items as shown here:

Each item in a collection is identified by a pair of keys: a partition key and a row key (in the SQL API, the row key is the item's `id`).

Tip 1: Plan your partition

Cosmos DB provides unlimited capacity, provided that you have a partitioning scheme that allows Cosmos DB to scale. When we create a collection, we can choose the storage capacity. There are two options:

- Fixed Storage Capacity 10GB

- Unlimited

If your data is projected to grow beyond 10GB, then you need to choose the second option.

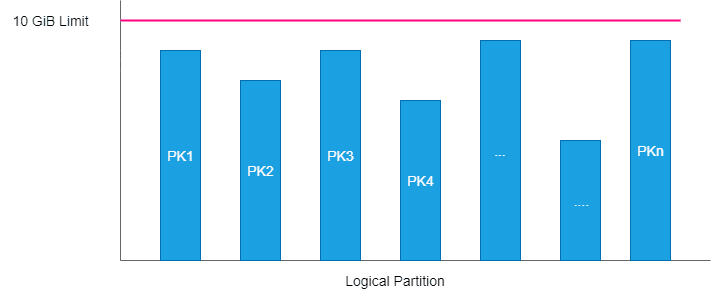

The unlimited storage mode requires us to define a partition key. Think of a partition like a bucket where you store your data. Each bucket is limited to 10 GB, but you can have as many as you need and Cosmos DB will manage where to actually store the data. This is how unlimited storage capacity is achieved.

Whatever partition key you choose, you need to ensure that it will distribute data evenly across partitions and allow your data to grow. Often you need to derive a partition key from 2 or more data properties.
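To make the idea of a derived key concrete, here is a minimal sketch in Python. It assumes each item carries a customer id and a creation date, and it combines the two into a single synthetic value. The property names (`customerId`, `created`, `partitionKey`) and the helper `make_partition_key` are hypothetical and only serve as an illustration; the right combination depends on your own data and query patterns.

```python
from datetime import date


def make_partition_key(customer_id: str, created: date) -> str:
    """Combine the customer id and the year-month into one synthetic key value."""
    return f"{customer_id}-{created:%Y-%m}"


# Hypothetical item; the derived value is stored on the item itself
# so it can be used as the collection's partition key.
item = {
    "id": "order-1001",
    "customerId": "cust-42",
    "created": "2019-03-15",
}
item["partitionKey"] = make_partition_key(
    item["customerId"], date.fromisoformat(item["created"])
)

print(item["partitionKey"])  # cust-42-2019-03
```

With a key like this, one customer's data is spread over one partition per month instead of accumulating in a single partition.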

Tip 2: Stay below the 10GB Logical Partition Limit

Decide whether you are likely to hit the 10GB logical partition limit. If so, you need to redesign your partition key.

Let me give you an example. We have data from different customers, and each customer has an id. Initially, we thought it was a good idea to use the customer id as the partition key. As we subsequently discovered, that was a bad idea. As customer data kept growing, it finally reached the 10GB limit. Everything in production for the impacted customers came to a halt, and we needed to redesign the data partitioning to avoid the “hot” partition. A hot partition is a partition to which your application keeps adding data over time. We also needed to migrate the data to the new data scheme, which was a painful process.

The lesson for us was to avoid creating any hot partitions. As a rule of thumb, we found that it is generally better to have many small partitions rather than a few big partitions.
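One way to spot this kind of problem early is to estimate how much data sits under each partition key value. The sketch below is an offline approximation in plain Python, not a Cosmos DB feature: it measures the JSON size of a sample of items and flags key values that are close to the limit. The function names and the 80% warning ratio are assumptions chosen for illustration.

```python
import json
from collections import defaultdict

LIMIT_BYTES = 10 * 1024**3  # the 10GB logical partition limit discussed above


def partition_sizes(items, key_field):
    """Sum the approximate JSON size in bytes of the items under each key value."""
    sizes = defaultdict(int)
    for item in items:
        sizes[item[key_field]] += len(json.dumps(item).encode("utf-8"))
    return dict(sizes)


def partitions_at_risk(sizes, limit_bytes=LIMIT_BYTES, warn_ratio=0.8):
    """Return the key values whose size is at or above warn_ratio of the limit."""
    return sorted(k for k, size in sizes.items() if size >= warn_ratio * limit_bytes)


# Tiny sample with an artificially small limit so the effect is visible.
sample = [
    {"id": "1", "customerId": "a", "payload": "x" * 500},
    {"id": "2", "customerId": "a", "payload": "x" * 500},
    {"id": "3", "customerId": "b", "payload": "x" * 10},
]
sizes = partition_sizes(sample, "customerId")
print(partitions_at_risk(sizes, limit_bytes=1000))  # ['a']
```

In our case, a check like this on the customer id would have shown a handful of customers growing much faster than the rest.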

Tip 3: Plan your collections

Throughput can be allocated at either the database or the collection level. Throughput allocated to a database is shared across all collections in the database. This is also called shared throughput. You can have as many collections as you need, but you do need to be aware that for each new collection you add to the database, Azure will increase the minimum provisioned throughput by 400 RU/s.

Alternatively, throughput allocated to a collection is called dedicated throughput and is set to a minimum of 400 RU/s.

The more collections you have, the more throughput you need to allocate – this increases cost.
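As a rough piece of arithmetic, the minimum throughput grows linearly with the number of collections. The sketch below only applies the 400 figure quoted above; the function name is hypothetical, and the actual minimums are set by Azure and may differ from this simple rule.

```python
MIN_RU_PER_COLLECTION = 400  # figure quoted in the text above (assumed constant)


def minimum_throughput(collection_count: int) -> int:
    """Estimate the minimum provisioned throughput for a number of collections."""
    if collection_count < 1:
        raise ValueError("collection_count must be at least 1")
    return collection_count * MIN_RU_PER_COLLECTION


for count in (1, 5, 20):
    print(count, "collections ->", minimum_throughput(count), "RU/s minimum")
```

Running the numbers like this before splitting data into many collections makes the cost impact easier to see.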

Tip 4: Always use a partition key in data operations

One thing often overlooked is that Cosmos DB charges you for every operation (read and write). For every 1 KB you read using the partition key and row key, you are charged 1 Request Unit (RU). Writing the same amount of data costs 4 RU.
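These figures can be turned into a back-of-the-envelope estimate. The sketch below assumes the charge scales linearly per started kilobyte, using the 1 RU read and 4 RU write figures from the paragraph above. That linear rule and the function name are simplifying assumptions; the real charge is reported by Cosmos DB for each request and depends on more than item size.

```python
import math

READ_RU_PER_KB = 1   # read figure from the text above
WRITE_RU_PER_KB = 4  # write figure from the text above


def estimate_ru(size_bytes: int, operation: str) -> int:
    """Roughly estimate the RU charge for one keyed read or write."""
    rates = {"read": READ_RU_PER_KB, "write": WRITE_RU_PER_KB}
    if operation not in rates:
        raise ValueError("operation must be 'read' or 'write'")
    kilobytes = max(1, math.ceil(size_bytes / 1024))
    return kilobytes * rates[operation]


print(estimate_ru(1024, "read"))   # 1
print(estimate_ru(3000, "write"))  # 12
```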

Data operations forced across partitions will cost you significantly more. Always aim to do data operations with specific partition and row keys to avoid cross-partition scans.
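To illustrate why keyed access is cheaper, here is a toy in-memory model in Python. It is not the Cosmos DB SDK: `TinyStore` and its methods are hypothetical, and it only counts how many items each style of access has to examine.

```python
from collections import defaultdict


class TinyStore:
    """Toy model: partition key -> {row key -> item}. Not the Cosmos DB SDK."""

    def __init__(self):
        self.partitions = defaultdict(dict)
        self.items_examined = 0

    def upsert(self, partition_key, row_key, item):
        self.partitions[partition_key][row_key] = item

    def point_read(self, partition_key, row_key):
        """Go straight to one item using both keys."""
        self.items_examined += 1
        return self.partitions.get(partition_key, {}).get(row_key)

    def scan(self, predicate):
        """Visit every item in every partition, as a cross-partition query would."""
        matches = []
        for partition in self.partitions.values():
            for item in partition.values():
                self.items_examined += 1
                if predicate(item):
                    matches.append(item)
        return matches


store = TinyStore()
for p in range(3):
    for r in range(100):
        store.upsert(f"p{p}", f"r{r}", {"partition": f"p{p}", "row": f"r{r}"})

store.point_read("p1", "r7")
print("point read examined:", store.items_examined)  # 1

store.items_examined = 0
store.scan(lambda item: item["row"] == "r7")
print("scan examined:", store.items_examined)  # 300
```

The scan finds the same kind of data but touches every item on the way, which is the work you end up paying for.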

Tip 5: Allocate enough throughput

One indication that you might not have provisioned enough throughput is that you often get Response 429 (Rate-Limited) from the Cosmos DB database. If this is the case, review your code and make sure requests are optimised (see Tip 4 above). If necessary, increase database throughput to a reasonable level to match your performance requirements.
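A simple way to judge what "often" means is to track the share of responses that come back as 429. The sketch below assumes you already collect response status codes somewhere in your application; the function names and the 5% threshold are hypothetical and should be tuned to your own requirements.

```python
from collections import Counter


def rate_limited_ratio(status_codes) -> float:
    """Return the fraction of responses that were 429 (Rate-Limited)."""
    codes = list(status_codes)
    if not codes:
        return 0.0
    return Counter(codes)[429] / len(codes)


def needs_attention(status_codes, threshold: float = 0.05) -> bool:
    """Flag a workload whose share of 429 responses exceeds the threshold."""
    return rate_limited_ratio(status_codes) > threshold


sample = [200] * 90 + [429] * 10
print(rate_limited_ratio(sample))  # 0.1
print(needs_attention(sample))     # True
```

If the ratio stays high after the requests have been optimised, that is the point at which raising the provisioned throughput makes sense.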

Author:

Anang Prihatanto – Analyst