Background

Modern software must be both responsive and scalable so that applications can handle growing loads and real-time demands. Efficient concurrency is hard to achieve in many programming languages because concurrent code is complex and error-prone.

Go, however, was designed with concurrency as a fundamental feature. A Goroutine is a lightweight thread managed by the Go runtime, which makes it straightforward to write concurrent code that is both simple and efficient. Goroutines allow developers to run many tasks at once without the heavy resource cost of traditional threads, making Go well suited to use cases such as microservices and real-time systems.

Objectives

Concurrency is at the heart of Go’s design. This article explains what Goroutines are, their role in enabling concurrency, and why they are so effective for modern computing. We’ll also discuss their features and the challenges developers may encounter.

What is a Goroutine?

A Goroutine is a function that runs independently alongside others. You start one by using the “go” keyword before a function call. Because Goroutines are much lighter than traditional threads, it’s possible to run thousands or even millions of them in a single program.

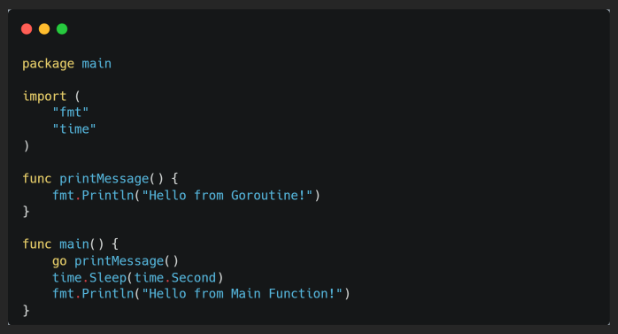

Example:

In this simple example, printMessage() runs concurrently with the main function:

Communication Between Goroutines: Channels

Concurrency is only useful if Goroutines can coordinate safely. Go uses channels to let Goroutines send and receive data without sharing memory directly.

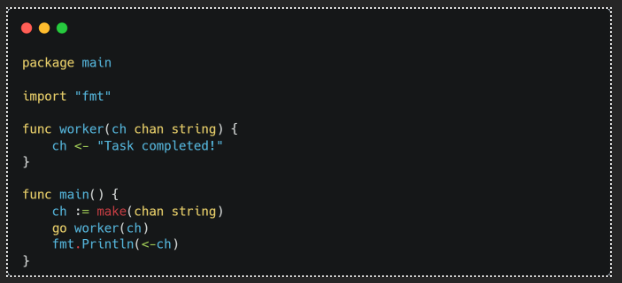

Example:

Here, the main function receives data from a Goroutine via the channel, which ensures safe communication: the receive blocks until a value is available, so no explicit lock is needed.
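A minimal sketch of this pattern, assuming a hypothetical produce function that sends one string on an unbuffered channel:

```go
package main

import "fmt"

// produce is a hypothetical sender that runs in its own Goroutine.
// The send blocks until main is ready to receive.
func produce(ch chan<- string) {
	ch <- "data from Goroutine"
}

func main() {
	ch := make(chan string) // unbuffered channel carrying strings

	go produce(ch)

	msg := <-ch // blocks until produce sends a value
	fmt.Println(msg) // prints "data from Goroutine"
}
```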

When to Use Goroutines

- I/O-bound tasks:
  - API calls
  - Database queries
  - File operations
- Real-time systems:
  - Chat applications
  - Live dashboards
  - Game servers
- Data processing:
  - CSV/JSON parsing
  - Image resizing
  - Bulk email sending

Using Goroutines in a User Dashboard

The Problem: Slow Data Aggregation

Imagine building a user dashboard that needs:

- Profile information

- Order history

- Notification preferences

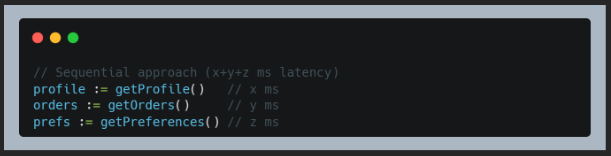

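Fetching all of this data sequentially is slow. If the three services take x, y, and z milliseconds respectively, each request waits for the previous one to finish:

Total latency = x + y + z milliseconds.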

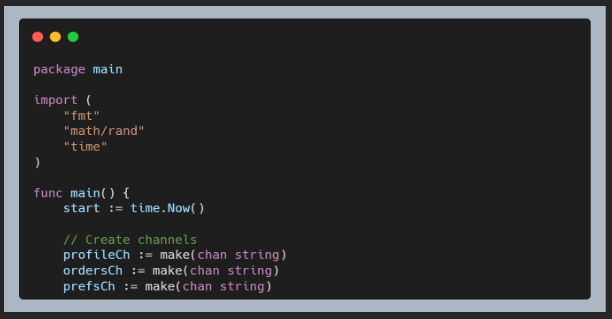

Goroutines: Lightweight Concurrency

With Goroutines, the program can fetch all three items concurrently, so the total latency is roughly that of the slowest call, max(x, y, z), rather than the sum of all three.

Key advantages:

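- Small footprint: a Goroutine starts with about 2 KB of stack that grows as needed (vs. a default stack of around 1 MB for a Java platform thread on typical 64-bit JVMs)

- Automatic scheduling across CPU cores by the Go runtime

- Simple syntax: just add the go keyword before a function call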
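To illustrate how cheap Goroutines are, the following sketch (spawnBlocked is a hypothetical helper) starts 10,000 Goroutines that all wait on one channel and counts them with runtime.NumGoroutine. Exact memory use depends on the Go version and platform:

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// spawnBlocked starts n Goroutines that all park on the same channel,
// reports how many Goroutines exist while they are parked, then
// releases them and waits for every one to exit.
func spawnBlocked(n int) int {
	release := make(chan struct{})
	var wg sync.WaitGroup

	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			<-release // wait here until release is closed
		}()
	}

	// Counts main as well, so the result is at least n+1.
	alive := runtime.NumGoroutine()

	close(release) // wake every parked Goroutine at once
	wg.Wait()
	return alive
}

func main() {
	alive := spawnBlocked(10_000)
	fmt.Println("Goroutines alive at peak:", alive)
}
```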

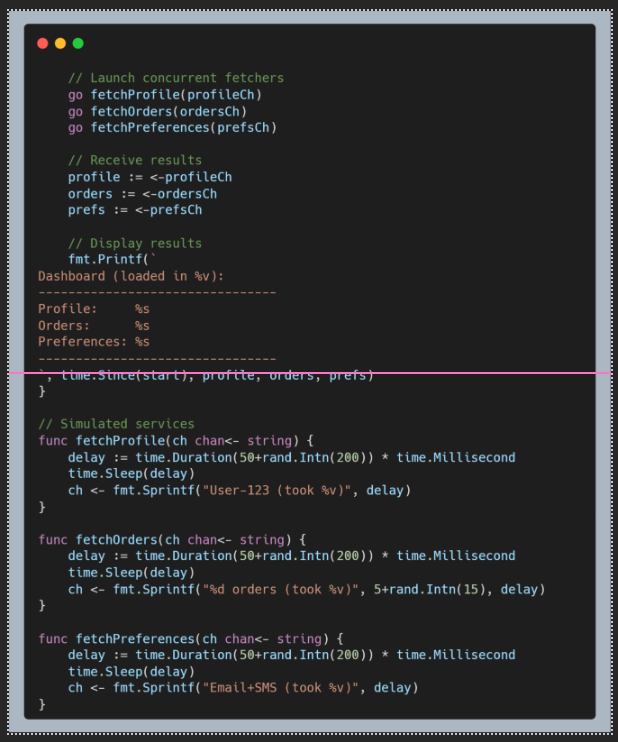

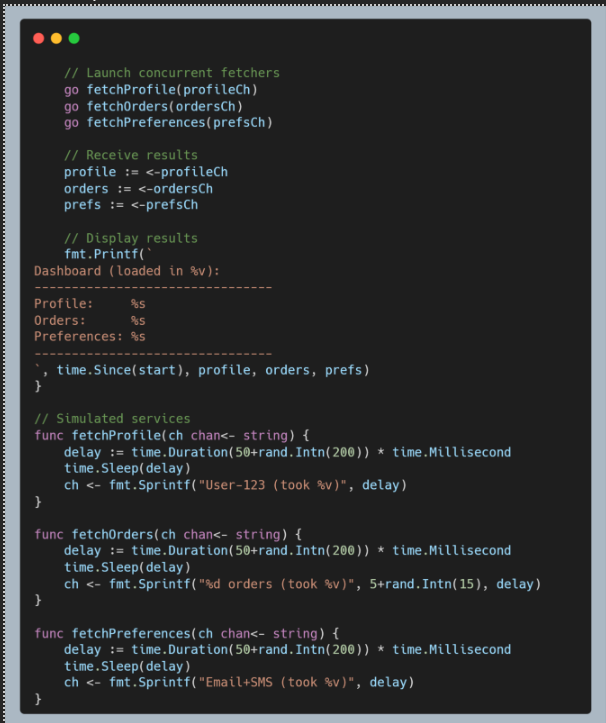

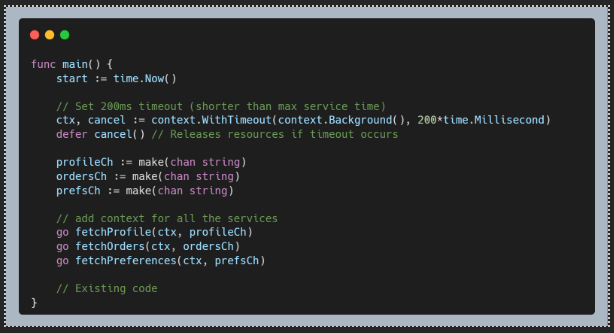

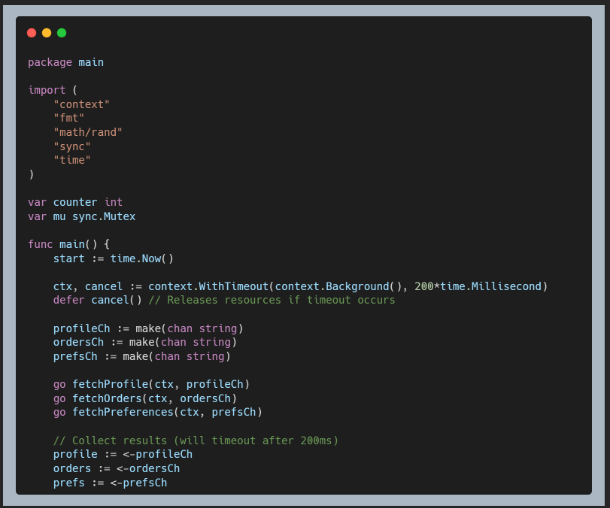

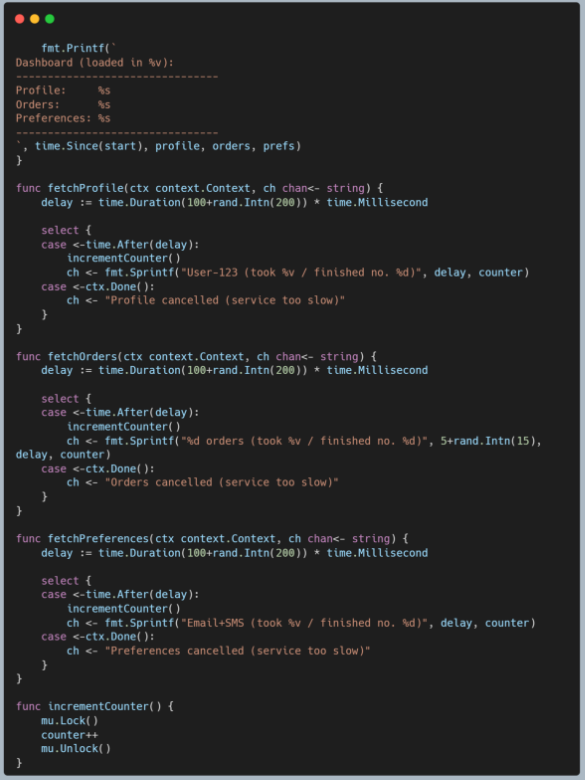

This simple example shows how easily the go keyword can be used to fetch all of the dashboard data concurrently:

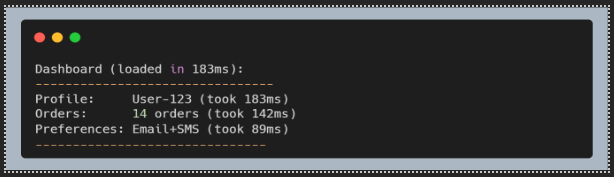

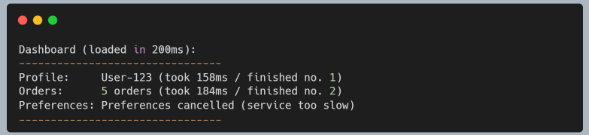

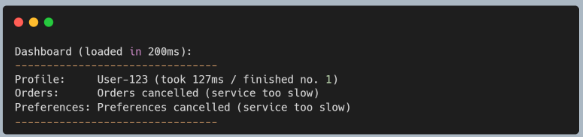

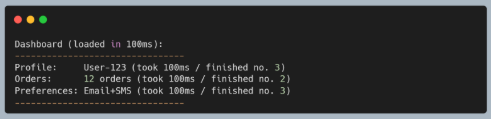

From the output, we can see that the total latency is approximately equal to the response time of the slowest service.

Concurrency Challenges in Go

Goroutines are powerful, but they come with challenges:

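- Race Conditions: occur when multiple Goroutines access the same data at the same time, at least one of them writes, and nothing synchronises the accesses. The result then depends on timing and can be silently wrong.

Solution: Protect shared state with synchronisation tools such as sync.Mutex, and use Go’s race detector (the -race flag) to find races during testing.

- Goroutine Leaks: occur when Goroutines block forever or never exit, so their memory is never reclaimed.

Solution: Use context cancellation and manage Goroutine lifecycles properly.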

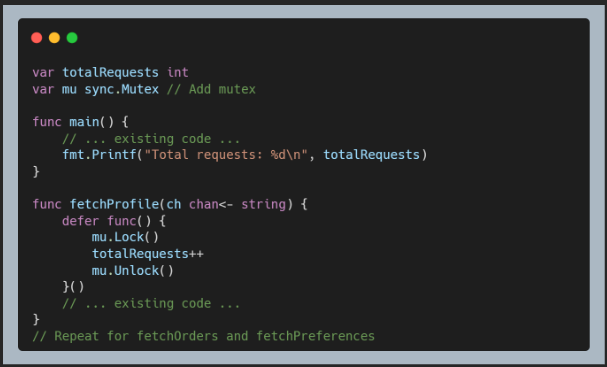

- Preventing Race Conditions (Shared Counter)

Problem: Multiple Goroutines updating a shared request counter.

Solution: Add sync.Mutex to protect shared state:

- Preventing Goroutine Leaks (Context Cancellation)

Problem: The preferences service might never respond, leaving its Goroutine running indefinitely.

Solution: Use context for cancellation:

Now, let’s apply both solutions to our dashboard code.

Sample output:

The sample output shows that the context timeout prevents the Goroutine leak: a service that takes too long is cancelled when the deadline expires, so it no longer holds up the other services.

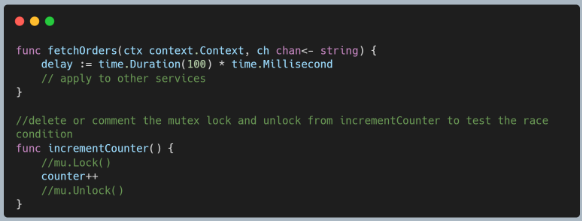

To observe the race condition, give every service the same fixed delay so that the Goroutines update the shared counter at nearly the same moment, for example:

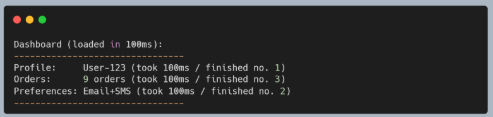

Sample output:

- Without mutex

- With mutex

From the sample above, we can see that without the mutex, services that finish at the same time can update the shared variable concurrently, so some updates may be lost. With the mutex, each update completes before the next one begins.
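Because races depend on timing, a run that happens to print the right total does not prove the code is safe. Go's race detector, enabled with the -race flag (for example, go run -race main.go or go test -race), reports unsynchronised accesses when they occur at runtime. For a simple counter like this one, the sync/atomic package is a lighter alternative to a mutex; a minimal sketch using atomic.Int64 (Go 1.19 or later), with a hypothetical countRequests helper:

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// countRequests launches n Goroutines that each record one request.
// atomic.Int64 makes each Add indivisible without needing a mutex.
func countRequests(n int) int64 {
	var requests atomic.Int64
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			requests.Add(1)
		}()
	}
	wg.Wait()
	return requests.Load()
}

func main() {
	fmt.Println("requests:", countRequests(1000)) // prints "requests: 1000"
}
```

A mutex remains the better fit when several related values must change together, as with the counter and results map in the dashboard.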

Why Goroutines Matter in Modern Applications

Goroutines make it easier to design scalable systems. Modern applications, especially servers and distributed systems, often need to handle many tasks concurrently. Traditional threading models can be heavy and complex, whereas Goroutines provide:

- Lightweight concurrency

- Low memory usage

- Efficient scheduling by the Go runtime

These features help developers build responsive, concurrent applications with less complexity.

Conclusion

Goroutines are a key reason for Go’s popularity in modern computing. Their lightweight design, simple syntax, and built-in synchronisation tools like channels make them practical for building scalable, responsive systems.

Benefits:

- Efficient concurrency with minimal overhead

- Cleaner code compared to traditional threads

- Scalable for handling many tasks at once

- Safe communication via channels

Trade-offs:

- Requires careful management to avoid race conditions and leaks

- Debugging concurrent code can be challenging

- Incorrect channel usage can cause deadlocks

Go’s approach to concurrency offers both power and simplicity. As systems grow more complex, mastering Goroutines will help you build reliable, high-performance software. For a deeper understanding, explore Go’s sync, context, and runtime packages.
